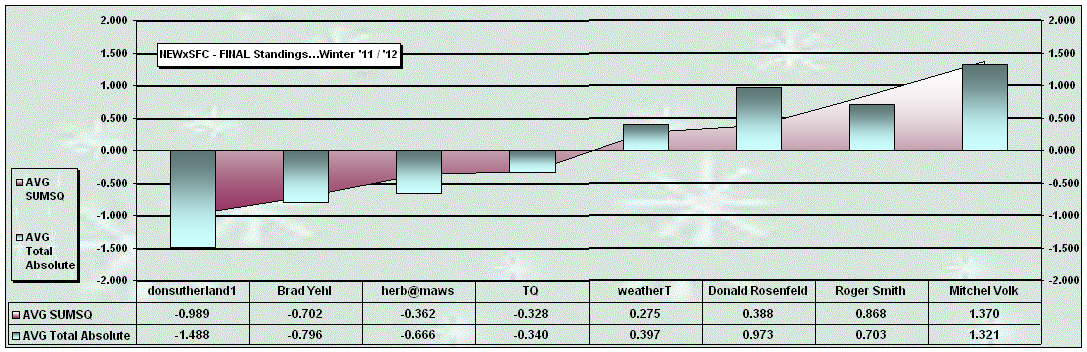

NEWxSFC - FINAL

Summary…Winter '11 / '12

| Previous Ranks | Rank | Forecaster | Class | Total STN 4casts | AVG SUMSQ: Error (") | AVG SUMSQ: Error Z | AVG SUMSQ: % MPRV over AVG | AVG SUMSQ: Rank | AVG STP: 4cast (") | AVG STP: Error | AVG STP: Error Z | AVG STP: % MPRV over AVG | AVG STP: Rank | AVG Total Absolute: Error (") | AVG Total Absolute: Error Z | AVG Total Absolute: % MPRV over AVG | AVG Total Absolute: Rank | AVG Absolute: Error (") | AVG Absolute: Error Z | AVG Absolute: % MPRV over AVG | AVG Absolute: Rank | Mean RSQ: RSQ | Mean RSQ: RSQ Z | Mean RSQ: % MPRV over AVG | Mean RSQ: Rank | Forecaster |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 | 1 | donsutherland1 | Chief | 37 | 58 | -0.989 | 50% | 2 | 50.5 | 8.9 | -0.406 | 29% | 4 | 20.25 | -1.488 | 34% | 2 | 1.21 | -1.181 | 30% | 2 | 78.5% | 1.086 | 34% | 1 | donsutherland1 |
| 3 | 2 | Brad Yehl | Intern | 37 | 77 | -0.702 | 35% | 3 | 49.1 | 10.4 | -0.267 | 11% | 5 | 25.07 | -0.796 | 18% | 3 | 1.51 | -0.544 | 14% | 4 | 73.3% | 0.830 | 24% | 3 | Brad Yehl |
| 1 | 3 | herb@maws | Senior | 37 | 109 | -0.362 | 19% | 5 | 48.2 | 17.1 | 0.532 | -45% | 7 | 25.77 | -0.666 | 17% | 4 | 1.64 | -0.557 | 12% | 4 | 74.9% | 0.860 | 28% | 3 | herb@maws |
| 5 | 4 | TQ | Senior | 39 | 94 | -0.328 | 16% | 4 | 48.2 | 11.3 | -0.124 | 10% | 6 | 28.22 | -0.340 | 8% | 4 | 1.56 | -0.378 | 10% | 5 | 62.1% | 0.212 | 5% | 6 | TQ |
| 4 | 5 | weatherT | Senior | 37 | 146 | 0.275 | -13% | 7 | 40.8 | 18.7 | 0.741 | -55% | 8 | 33.27 | 0.397 | -8% | 8 | 2.07 | 0.475 | -14% | 8 | 49.5% | -0.642 | -14% | 8 | weatherT |
| 7 | 6 | Donald Rosenfeld | Senior | 39 | 128 | 0.388 | -21% | 7 | 72.3 | 12.9 | 0.051 | -6% | 6 | 37.65 | 0.973 | -24% | 8 | 2.08 | 0.958 | -23% | 8 | 49.9% | -0.393 | -17% | 7 | Donald Rosenfeld |
| 8 | 7 | Roger Smith | Journeyman | 45 | 143 | 0.868 | -46% | 7 | 42.9 | 16.6 | 0.544 | -26% | 5 | 35.90 | 0.703 | -19% | 7 | 1.62 | 0.208 | -2% | 5 | 37.1% | -1.061 | -39% | 8 | Roger Smith |
| 6 | 8 | Mitchel Volk | Senior | 36 | 226 | 1.370 | -67% | 9 | 70.8 | 11.3 | -0.113 | 12% | 5 | 39.65 | 1.321 | -28% | 9 | 2.66 | 1.510 | -42% | 9 | 44.6% | -0.970 | -22% | 8 | Mitchel Volk |

There were two (2) snowstorm forecasting Contests during the ’11 / ’12 season. Under the ‘two-thirds’ rule…forecasters who entered two (2) forecasts were included in the final standings.

To qualify for ranking in the final ‘End-of-Season’ standings…a forecaster must enter at least two-thirds of all Contests. If a forecaster has made more than enough forecasts to qualify for ranking…only the lowest SUMSQ Z-scores necessary to qualify are used in computing the average. IOW…if you made nine forecasts…only your six best SUMSQ Z-scores are used to evaluate your season-to-date performance. You can think of it as dropping the worst quiz scores before your final grade is determined. The reason we have this rule is to 1) make it possible to miss entering a forecast or two throughout the season and still be eligible for Interim and ‘End-of-Season’ ranking and 2) encourage forecasters to take on difficult and/or late-season storms without fear of how a bad forecast might degrade their overall ‘season-to-date’ performance score(s).
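The qualifying rule can be sketched in a few lines of Python. This is only an illustration, not the Contest's actual scoring code: the function name `season_average_z` and the choice to round two-thirds up to the next whole Contest are assumptions.

```python
def season_average_z(z_scores, contests_held):
    """Average the best (lowest) SUMSQ Z-scores needed to qualify.

    Returns None when the forecaster has not entered enough Contests.
    """
    # Smallest whole number of entries that is at least two-thirds of the
    # Contests held. Rounding up is an assumption, not a stated rule.
    required = (2 * contests_held + 2) // 3
    if required == 0 or len(z_scores) < required:
        return None
    best = sorted(z_scores)[:required]  # lower Z-score = better forecast
    return sum(best) / required


# Hypothetical forecaster with nine entries in a nine-Contest season:
# only the six lowest Z-scores count toward the average.
nine_scores = [3.0, -1.0, 2.0, -2.0, 1.0, 0.0, 4.0, 5.0, 6.0]
print(season_average_z(nine_scores, 9))
```

With two Contests in a season, the same arithmetic requires both entries, which matches the standings described here.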

The mean normalized ‘SUMSQ error’ is the Contest's primary measure of forecaster performance. This metric measures how well the forecaster's expected snowfall 'distribution and magnitude' for _all_ forecast stations captured the 'distribution and magnitude' of _all_ observed snowfall amounts. A forecaster with a lower average SUMSQ Z-score has made more skillful forecasts than a forecaster with a higher average SUMSQ Z-score.
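As a rough sketch of how such a score could be computed, assume ‘SUMSQ error’ is the sum of squared station errors and that the Z-score standardizes each forecaster's SUMSQ against every entry for the same storm. Both readings, the use of the population standard deviation, and all names and snowfall amounts below are assumptions for illustration.

```python
from statistics import mean, pstdev


def sumsq_error(forecast, observed):
    """Sum of squared station errors (inches squared)."""
    return sum((f - o) ** 2 for f, o in zip(forecast, observed))


def z_scores(values):
    """Standardize values against the whole field of entries."""
    mu = mean(values)
    sigma = pstdev(values)  # population std dev: an assumption
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical storm: three stations, two made-up forecasters.
observed = [4.0, 10.0, 0.0]
entries = {"A": [5.0, 8.0, 0.0], "B": [2.0, 14.0, 1.0]}

errors = {name: sumsq_error(fc, observed) for name, fc in entries.items()}
z = dict(zip(errors, z_scores(list(errors.values()))))
print(errors, z)
```

Forecaster A has the smaller SUMSQ error and therefore the lower (better) Z-score.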

The ‘Storm Total Precipitation error’ statistic is the absolute arithmetic difference between a forecaster's sum-total snowfall for all stations and the observed sum-total snowfall. This metric…by itself…is not a meaningful measure of skill…but can provide additional insight into forecaster bias.
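A minimal sketch of the definition above, with hypothetical function names and made-up amounts, shows why the statistic says little on its own: offsetting station errors can cancel in the totals.

```python
def stp_error(forecast, observed):
    """Absolute difference between forecast and observed storm totals."""
    return abs(sum(forecast) - sum(observed))


def stp_bias(forecast, observed):
    """Signed difference: positive means over-forecast overall."""
    return sum(forecast) - sum(observed)


# Hypothetical two-station storm: each station is missed by 4",
# yet the storm totals agree exactly.
forecast = [6.0, 2.0]
observed = [2.0, 6.0]
print(stp_error(forecast, observed), stp_bias(forecast, observed))
```

The signed version is included only to illustrate the 'bias' reading; the text defines the statistic as the absolute difference.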

The ‘Total Absolute Error’ statistic is the sum of the absolute values of your forecast errors…regardless of whether you over-forecast or under-forecast. This metric measures the magnitude of your errors.

The ‘Average Absolute Error’ is the forecaster's ‘Total Absolute Error’ divided by the number of stations where snow was forecast or observed.
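The two absolute-error statistics can be sketched together. This assumes ‘Total Absolute Error’ is the sum of the absolute station errors (which the division in the ‘Average Absolute Error’ definition suggests) and that a station counts when either its forecast or its observed amount is above zero; the function names and amounts are hypothetical.

```python
def total_absolute_error(forecast, observed):
    """Sum of absolute station errors, ignoring over/under direction."""
    return sum(abs(f - o) for f, o in zip(forecast, observed))


def average_absolute_error(forecast, observed):
    """Total absolute error per station with snow forecast or observed."""
    stations = sum(1 for f, o in zip(forecast, observed) if f > 0 or o > 0)
    if stations == 0:
        return 0.0
    return total_absolute_error(forecast, observed) / stations


# Hypothetical four-station storm; the third station had no snow
# forecast or observed, so it is left out of the station count.
forecast = [5.0, 8.0, 0.0, 0.0]
observed = [4.0, 10.0, 0.0, 1.0]
print(total_absolute_error(forecast, observed))
print(average_absolute_error(forecast, observed))
```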

The ‘RSQ error’ statistic is a measure of how well the forecast captured the variability of the observed snowfall. Combined with the SUMSQ error statistic…RSQ provides added information about how well the forecaster's ‘model’ performed.
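The page does not give a formula for RSQ. One common reading, assumed here, is the squared Pearson correlation between forecast and observed station amounts; the function name `r_squared` and the sample values are hypothetical.

```python
def r_squared(forecast, observed):
    """Squared Pearson correlation between forecast and observed amounts."""
    n = len(forecast)
    mean_f = sum(forecast) / n
    mean_o = sum(observed) / n
    cov = sum((f - mean_f) * (o - mean_o) for f, o in zip(forecast, observed))
    var_f = sum((f - mean_f) ** 2 for f in forecast)
    var_o = sum((o - mean_o) ** 2 for o in observed)
    if var_f == 0 or var_o == 0:
        return 0.0  # no variability to explain
    return (cov * cov) / (var_f * var_o)


# Hypothetical forecast that doubles every observed amount.
observed = [1.0, 2.0, 3.0]
forecast = [2.0, 4.0, 6.0]
rsq = r_squared(forecast, observed)
sumsq = sum((f - o) ** 2 for f, o in zip(forecast, observed))
print(rsq, sumsq)
```

Under this reading, a forecast that doubles every observed amount scores an RSQ of 1 while its squared errors stay large, which is one way to see why RSQ is read alongside SUMSQ rather than on its own.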