---

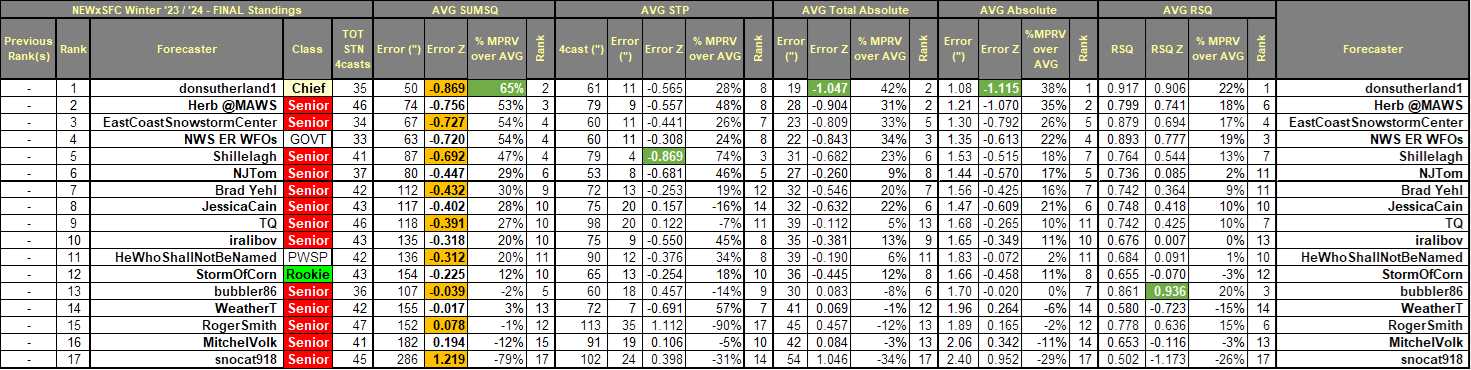

FINAL standings after THREE contest-worthy snowstorms

Under the ‘two-thirds’ rule … only forecasters who entered at least TWO forecasts are included in these FINAL standings.

---

To qualify for ranking in the Interim and final ‘End-of-Season’ standings … a forecaster must enter at least two-thirds of all Contests.

If a forecaster has made more than enough forecasts to qualify for ranking … only the lowest SUMSQ Z-scores necessary to qualify are used in computing the average. IOW … if you made nine forecasts … only your six best SUMSQ Z-scores are used to evaluate your season-to-date performance. You can think of it as dropping your worst test scores before your final grade is determined.
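The qualification and score-dropping rule can be sketched in a few lines of Python. This is a minimal sketch, not the Contest's actual scoring code: the function names are hypothetical, and it assumes the two-thirds requirement is rounded up when the number of Contests isn't a multiple of three.

```python
from typing import List, Optional


def required_entries(num_contests: int) -> int:
    """Minimum number of entries needed to qualify: two-thirds of all Contests.

    Assumption: a fractional requirement is rounded up (integer ceiling).
    """
    return (2 * num_contests + 2) // 3


def season_score(z_scores: List[float], num_contests: int) -> Optional[float]:
    """Average of the lowest SUMSQ Z-scores needed to qualify.

    Returns None when the forecaster entered too few Contests to be ranked.
    """
    k = required_entries(num_contests)
    if len(z_scores) < k:
        return None  # not eligible for Interim or End-of-Season ranking
    best = sorted(z_scores)[:k]  # lower Z-score = better forecast, so keep the k lowest
    return sum(best) / k


# Hypothetical Z-scores for a forecaster who entered all three Contests this season:
# two entries are required, so the worst score (0.4) is dropped.
print(season_score([0.4, -0.8, -0.2], num_contests=3))
```

With nine Contests, `required_entries(9)` is 6, which matches the "six best of nine" example above.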

The reasons we have this rule:

1) It makes it possible to miss entering a forecast or two during the season and still be eligible for Interim and ‘End-of-Season’ ranking.

2) It encourages forecasters to take on difficult and/or late-season storms without fear that a bad forecast might degrade their overall 'season-to-date' performance score(s).

---

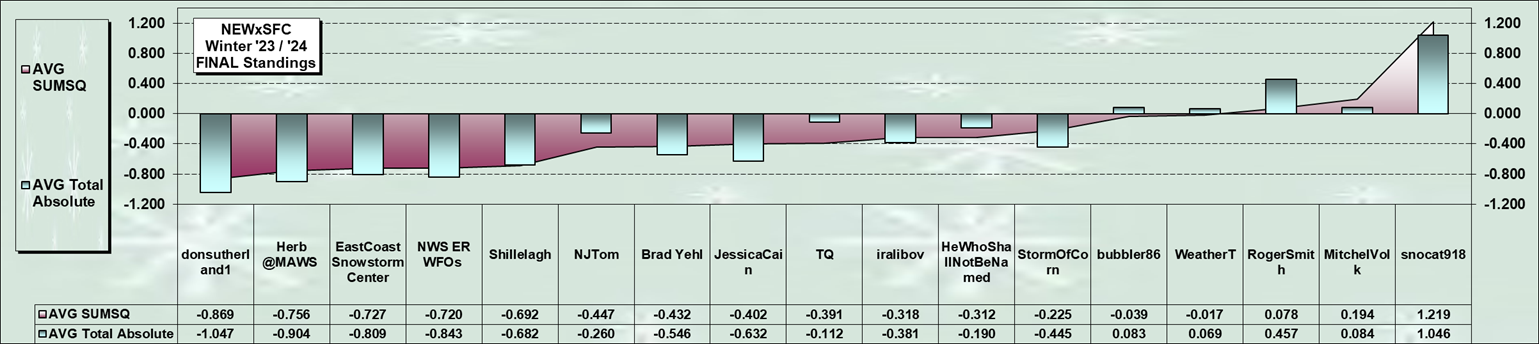

The average normalized ‘SUMSQ error’ is the Contest's primary measure of forecaster performance.

This metric measures how well the forecaster's expected snowfall 'distribution and magnitude' for _all_ forecast stations captured the 'distribution and magnitude' of _all_ observed snowfall amounts.

A forecaster with a lower average SUMSQ Z-score has made more skillful forecasts than a forecaster with a higher average SUMSQ Z-score.
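One way to picture the SUMSQ Z-score for a single storm: square each station's forecast error, add them up, then standardize each forecaster's total against everyone who entered that storm. This is a sketch under stated assumptions, not the Contest's published method: it assumes amounts are in inches, that every forecaster forecast every station, and that 'normalized' means an ordinary Z-score using the population standard deviation. Station IDs and forecaster names are hypothetical.

```python
import statistics
from typing import Dict


def sumsq_error(forecast: Dict[str, float], observed: Dict[str, float]) -> float:
    """Sum of squared station errors (forecast minus observed)."""
    return sum((forecast[stn] - observed[stn]) ** 2 for stn in observed)


def sumsq_z_scores(errors: Dict[str, float]) -> Dict[str, float]:
    """Standardize each forecaster's SUMSQ error against all forecasters for the same storm.

    Assumption: population standard deviation (statistics.pstdev).
    """
    values = list(errors.values())
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        raise ValueError("all SUMSQ errors are identical; Z-scores are undefined")
    return {name: (err - mean) / sd for name, err in errors.items()}


# Hypothetical storm: observed snowfall (inches) at three stations
observed = {"BOS": 10.0, "PVD": 6.0, "ORH": 12.0}
forecasts = {
    "Forecaster A": {"BOS": 9.0, "PVD": 7.0, "ORH": 12.0},
    "Forecaster B": {"BOS": 14.0, "PVD": 2.0, "ORH": 8.0},
    "Forecaster C": {"BOS": 8.0, "PVD": 6.0, "ORH": 14.0},
}

errors = {name: sumsq_error(fc, observed) for name, fc in forecasts.items()}
z = sumsq_z_scores(errors)
for name in sorted(z, key=z.get):  # lowest (best) Z-score first
    print(f"{name}: SUMSQ = {errors[name]:.1f}, Z = {z[name]:+.2f}")
```

Standardizing within each storm puts storms of very different sizes on a common scale, so their Z-scores can be averaged across the season.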

---

The ‘Storm Total Precipitation error’ statistic is the absolute arithmetic difference between a forecaster's sum-total snowfall for all stations and the observed sum-total snowfall. By itself … this metric is not a meaningful measure of skill … but it can provide additional insight into forecaster bias.
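A minimal sketch of the Storm Total Precipitation error, using hypothetical stations and amounts (inches). The example forecaster swaps the BOS and PVD amounts, yet the station errors cancel and the storm-total error comes out to zero. That is why this metric alone says little about skill. Keeping the signed difference (before `abs()`) shows the direction of the total-snowfall bias: positive means the total was over-forecast.

```python
from typing import Dict


def storm_total_bias(forecast: Dict[str, float], observed: Dict[str, float]) -> float:
    """Signed difference: positive = total over-forecast, negative = total under-forecast."""
    forecast_total = sum(forecast[stn] for stn in observed)
    observed_total = sum(observed.values())
    return forecast_total - observed_total


def storm_total_error(forecast: Dict[str, float], observed: Dict[str, float]) -> float:
    """Absolute difference between forecast and observed snowfall summed over all stations."""
    return abs(storm_total_bias(forecast, observed))


observed = {"BOS": 10.0, "PVD": 6.0, "ORH": 12.0}
forecast = {"BOS": 6.0, "PVD": 10.0, "ORH": 12.0}  # BOS and PVD amounts swapped
print(storm_total_error(forecast, observed))  # 0.0
```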

---

The ‘Total Absolute Error’ statistic is the sum of your station-by-station forecast errors regardless of whether you over-forecast or under-forecast.

This metric measures the magnitude of a forecast's errors.
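A minimal sketch of the Total Absolute Error, with hypothetical stations and amounts (inches). Because the absolute value is taken at each station, over- and under-forecasts cannot cancel: a forecast that swaps the BOS and PVD amounts has the same storm total as the observations (28 inches) but a Total Absolute Error of 8 inches.

```python
from typing import Dict


def total_absolute_error(forecast: Dict[str, float], observed: Dict[str, float]) -> float:
    """Sum of |forecast - observed| over all stations; over- and under-forecasts both count."""
    return sum(abs(forecast[stn] - observed[stn]) for stn in observed)


observed = {"BOS": 10.0, "PVD": 6.0, "ORH": 12.0}
forecast = {"BOS": 6.0, "PVD": 10.0, "ORH": 12.0}
print(total_absolute_error(forecast, observed))  # 8.0
```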

---

The ‘Average Absolute Error’ is the forecaster's ‘Total Absolute Error’ divided by the number of stations where snow was forecast or observed.
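A minimal sketch of the Average Absolute Error. Only stations where snow was forecast or observed count toward the divisor; a station where both are zero is skipped (it would add nothing to the total anyway). Returning 0.0 when no station had snow forecast or observed is an assumption of this sketch, and the stations and amounts are hypothetical.

```python
from typing import Dict


def average_absolute_error(forecast: Dict[str, float], observed: Dict[str, float]) -> float:
    """Total Absolute Error divided by the number of stations where snow was forecast or observed."""
    active = [stn for stn in observed if forecast[stn] > 0 or observed[stn] > 0]
    if not active:
        return 0.0  # assumption: no snow forecast or observed anywhere means no error
    total = sum(abs(forecast[stn] - observed[stn]) for stn in active)
    return total / len(active)


observed = {"BOS": 10.0, "PVD": 6.0, "ORH": 12.0, "ACK": 0.0}
forecast = {"BOS": 6.0, "PVD": 10.0, "ORH": 12.0, "ACK": 0.0}  # ACK: none forecast, none observed
print(average_absolute_error(forecast, observed))  # 8 inches spread over 3 stations
```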

---

The ‘RSQ error’ (R-squared, the coefficient of determination) statistic measures how well the forecast captured the variability of the observed snowfall.

Combined with the SUMSQ error statistic … RSQ provides additional information about how well the forecaster's ‘model’ performed.
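A minimal sketch of RSQ, assuming it is computed like the spreadsheet RSQ function: the square of the Pearson correlation between forecast and observed station amounts. The hypothetical forecast below is exactly double the observed snowfall everywhere, so it captures the pattern perfectly (RSQ of 1) while still carrying a large SUMSQ error. That is the kind of case where reading RSQ alongside SUMSQ helps.

```python
from typing import Dict


def rsq(forecast: Dict[str, float], observed: Dict[str, float]) -> float:
    """Square of the Pearson correlation between forecast and observed amounts."""
    stations = list(observed)
    f = [forecast[stn] for stn in stations]
    o = [observed[stn] for stn in stations]
    n = len(stations)
    mean_f = sum(f) / n
    mean_o = sum(o) / n
    # Unnormalized covariance and variances; the 1/n factors cancel in the ratio.
    cov = sum((x - mean_f) * (y - mean_o) for x, y in zip(f, o))
    var_f = sum((x - mean_f) ** 2 for x in f)
    var_o = sum((y - mean_o) ** 2 for y in o)
    if var_f == 0 or var_o == 0:
        raise ValueError("RSQ is undefined when all forecast or all observed amounts are equal")
    return cov ** 2 / (var_f * var_o)


observed = {"BOS": 10.0, "PVD": 6.0, "ORH": 12.0}
forecast = {stn: 2 * amt for stn, amt in observed.items()}  # right pattern, double the amounts
sumsq = sum((forecast[stn] - observed[stn]) ** 2 for stn in observed)
print(f"RSQ = {rsq(forecast, observed):.3f}, SUMSQ = {sumsq:.1f}")
```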

---

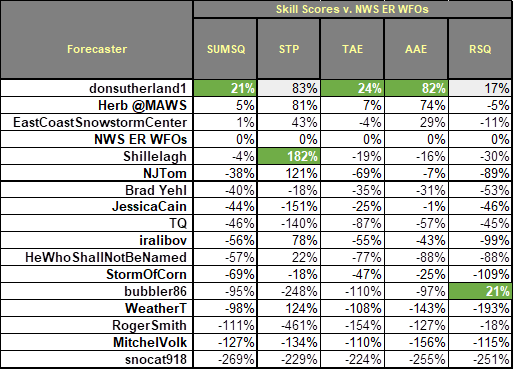

The ‘Skill score’ measures forecaster performance by comparing various Z-scores against a standard (NWS ER WFOs). Positive (negative) values indicate better (worse) performance than the standard. The NWS itself scores 0% because it is the standard; this does not mean it has no skill. GREEN highlights the best score in each category.
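The Contest's exact skill-score formula isn't spelled out here. As a minimal sketch, assume the skill score is the percent improvement in a lower-is-better Z-score relative to the standard's Z-score for the same metric. Under that assumption, anyone who matches the standard scores 0%, which is consistent with the NWS showing 0%. The function name and example Z-scores are hypothetical.

```python
def skill_score(z_forecaster: float, z_standard: float) -> float:
    """Percent improvement over the standard for a lower-is-better Z-score.

    Assumption (not the Contest's published formula):
        skill = 100 * (z_standard - z_forecaster) / |z_standard|
    Positive = better than the standard, negative = worse, 0 = same as the standard.
    """
    if z_standard == 0:
        raise ValueError("skill score is undefined when the standard's Z-score is 0")
    return 100.0 * (z_standard - z_forecaster) / abs(z_standard)


z_nws = 0.5  # hypothetical standard (NWS ER WFOs) Z-score
print(skill_score(0.25, z_nws))   # 50.0  (better than the standard)
print(skill_score(0.75, z_nws))   # -50.0 (worse than the standard)
print(skill_score(z_nws, z_nws))  # 0.0   (the standard compared with itself)
```

One drawback of this form is that it becomes unstable when the standard's Z-score is near zero, so the Contest may well use a different normalization.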